Updated 2020-05-01: Added /dev/dri mount point in LXC container.

Recently I needed a GPU inside an LXC container. There are some good tutorials already, but I still found the process difficult, so I’m writing this post as documentation for future reference.

Parts

- Proxmox 6.1

- Arch Linux Container

- Nvidia Quadro K620

Proxmox Host

Ensure the system is up to date:

apt-get update

apt-get dist-upgrade

Add the pve-no-subscription repository to /etc/apt/sources.list to get access to pve-headers:

# PVE pve-no-subscription repository provided by proxmox.com,

# NOT recommended for production use

deb http://download.proxmox.com/debian/pve buster pve-no-subscription

Install pve-headers:

apt-get update

apt-get install pve-headers

Ensure your graphics card is present:

lspci | grep -i nvidia

You should see some output like the following:

08:00.0 VGA compatible controller: NVIDIA Corporation GM107GL [Quadro K620] (rev a2)

Next install the Nvidia drivers. These exist in buster-backports, so add the following to /etc/apt/sources.list:

deb http://deb.debian.org/debian buster-backports main contrib non-free

deb-src http://deb.debian.org/debian buster-backports main contrib non-free

Install the Nvidia driver and SMI package (you must specify buster-backports when installing):

apt-get update

apt-get install -t buster-backports nvidia-driver nvidia-smi

Ensure you load the correct kernel modules. /etc/modules-load.d/nvidia.conf should contain the following modules (in my case nvidia-drm was already present):

nvidia-drm

nvidia

nvidia_uvm

Create /etc/udev/rules.d/70-nvidia.rules and populate it with:

# Create /dev/nvidia0, /dev/nvidia1 … and /dev/nvidiactl when nvidia module is loaded

KERNEL=="nvidia", RUN+="/bin/bash -c '/usr/bin/nvidia-smi -L && /bin/chmod 666 /dev/nvidia*'"

# Create the CUDA node when nvidia_uvm CUDA module is loaded

KERNEL=="nvidia_uvm", RUN+="/bin/bash -c '/usr/bin/nvidia-modprobe -c0 -u && /bin/chmod 0666 /dev/nvidia-uvm*'"

These rules are required to:

- Set more permissive permissions

- Enable nvidia_uvm which isn’t started by default (at least for my card it seems)
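Before rebooting, it may be worth confirming that the module list is complete. The following is a minimal sketch, not part of the original setup: `missing_modules` is a hypothetical helper that prints any required module not listed in a given file. It treats `-` and `_` in module names as equivalent, which is how modprobe handles them.

```shell
# missing_modules FILE MODULE...
# Hypothetical helper: prints each MODULE that is not listed in FILE.
# Comments and whitespace in FILE are ignored; '-' and '_' are treated alike.
missing_modules() {
  conf=$1
  shift
  for mod in "$@"; do
    want=$(printf '%s\n' "$mod" | sed 's/-/_/g')
    if ! sed -e 's/#.*//' -e 's/[[:space:]]//g' -e 's/-/_/g' "$conf" \
        | grep -qxF "$want"; then
      printf '%s\n' "$mod"
    fi
  done
}

# Example (on the Proxmox host):
#   missing_modules /etc/modules-load.d/nvidia.conf nvidia-drm nvidia nvidia_uvm
```

No output means all three modules are listed.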

Reboot the host and check that the output of ls -al /dev/nvidia* and ls -al /dev/dri/* is similar to the following:

crw-rw-rw- 1 root root 195, 0 Feb 11 18:11 /dev/nvidia0

crw-rw-rw- 1 root root 195, 255 Feb 11 18:11 /dev/nvidiactl

crw-rw-rw- 1 root root 195, 254 Feb 11 18:11 /dev/nvidia-modeset

crw-rw-rw- 1 root root 236, 0 Feb 11 18:11 /dev/nvidia-uvm

crw-rw-rw- 1 root root 236, 1 Feb 11 18:11 /dev/nvidia-uvm-tools

crw-rw---- 1 root video 226, 0 May 1 17:43 /dev/dri/card0

crw-rw---- 1 root video 226, 1 May 1 17:43 /dev/dri/card1

crw-rw---- 1 root render 226, 128 May 1 17:43 /dev/dri/renderD128

Take note of the numbers in the fifth column above (195, 236 and 226 respectively). These are the device major numbers and are required later on.
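The major numbers can also be pulled out of the listing rather than read by eye. This is a small sketch under the assumption that the `ls -al` output has the same column layout as shown above; `device_majors` is a hypothetical helper name.

```shell
# device_majors: reads `ls -al` output on stdin and prints the unique
# major numbers of character devices (lines whose mode starts with 'c').
device_majors() {
  awk '/^c/ { sub(/,$/, "", $5); print $5 }' | sort -n -u
}

# Example (on the Proxmox host):
#   ls -al /dev/nvidia* /dev/dri/* | device_majors
```

Symlinks and directory entries are skipped, since only lines starting with `c` are considered.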

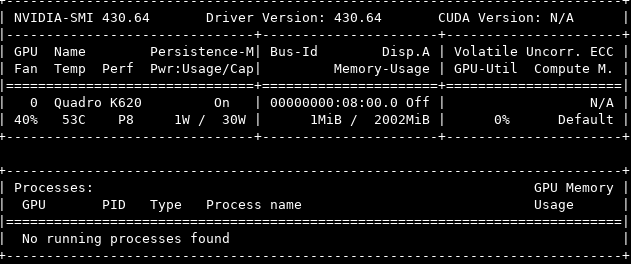

Additionally you can check the nvidia card is recognised and the drivers are working using the nvidia-smi command which should show something similar:

LXC Container

Next we need to install the Nvidia drivers inside the container. It is important that the Nvidia driver versions match exactly between the Proxmox host and the container.

Debian ships an older Nvidia driver (430.64), so I had to manually install that version inside my Arch Linux container.
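Because the container's driver has to match the host's, one option is to derive the download URL from the version the host reports. The sketch below assumes the URL pattern used in this post holds for other versions; `nvidia_driver_url` is a hypothetical helper name.

```shell
# nvidia_driver_url VERSION
# Prints the installer URL for VERSION, following the URL pattern used in
# this post (an assumption: not every version is guaranteed to exist there).
nvidia_driver_url() {
  version=$1
  printf 'http://us.download.nvidia.com/XFree86/Linux-x86_64/%s/NVIDIA-Linux-x86_64-%s.run\n' \
    "$version" "$version"
}

# Example: look up the version on the Proxmox host, then build the URL.
#   nvidia-smi --query-gpu=driver_version --format=csv,noheader
#   nvidia_driver_url 430.64
```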

Download the required driver (changing version numbers in the URL does work):

wget http://us.download.nvidia.com/XFree86/Linux-x86_64/430.64/NVIDIA-Linux-x86_64-430.64.run

Execute the installer without the kernel module:

chmod +x NVIDIA-Linux-x86_64-430.64.run

sudo ./NVIDIA-Linux-x86_64-430.64.run --no-kernel-module

Now that the drivers are installed, we need to pass the GPU through. Shut down the container and add the following to your container configuration /etc/pve/lxc/###.conf, changing the numbers to the ones recorded earlier if they differ:

lxc.cgroup.devices.allow: c 195:* rwm

lxc.cgroup.devices.allow: c 236:* rwm

lxc.cgroup.devices.allow: c 226:* rwm

lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file

lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file

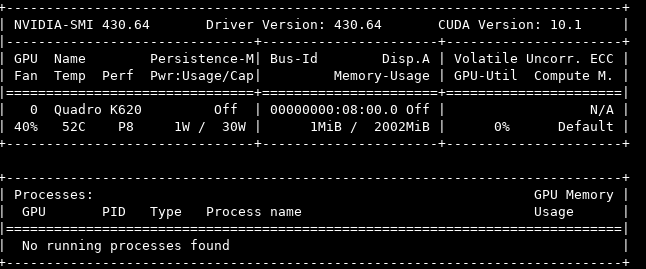

lxc.mount.entry: /dev/dri dev/dri none bind,optional,create=dirStart the container and confirm the passthrough worked by executing ls -al /dev/nvidia* and ls -al /dev/dri/*. Additionally nvidia-smi should now show you an identical result to the Proxmox host:

You have now successfully set up GPU passthrough for an LXC container.
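Since a version mismatch breaks the passthrough, a quick comparison of the two driver versions can be a useful sanity check after any update. This is only a sketch: `check_driver_match` is a hypothetical helper, and the container ID `101` in the usage comment is a placeholder.

```shell
# check_driver_match HOST_VERSION CONTAINER_VERSION
# Prints a short verdict and returns non-zero when the versions differ.
check_driver_match() {
  host=$1
  container=$2
  if [ "$host" = "$container" ]; then
    echo "OK: host and container both run driver $host"
  else
    echo "MISMATCH: host=$host container=$container"
    return 1
  fi
}

# Example, run on the Proxmox host (101 is a placeholder container ID):
#   host=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader)
#   ct=$(pct exec 101 -- nvidia-smi --query-gpu=driver_version --format=csv,noheader)
#   check_driver_match "$host" "$ct"
```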

Notes

- For some reason nvidia-smi does not return a CUDA Version on the Proxmox host

- nvidia-smi outputs the names of processes using the GPU, however this list is blank when executed from within the container

- This setup is somewhat fragile, as any update to an Nvidia driver that causes a version mismatch will break the passthrough. I have seen other posts talk about pinning / ignoring Nvidia driver updates via apt but have not set that up yet.
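One way to approach the pinning mentioned in the last note is `apt-mark hold`, which asks apt to keep a package at its current version. I have not used this on my setup, so treat it as an untested sketch. `hold_packages` and the `APT_MARK` override are hypothetical, added only so the command can be dry-run, and dependencies of the held packages may need holding as well.

```shell
# Assumption: APT_MARK may be overridden (e.g. APT_MARK=echo) for a dry run.
APT_MARK=${APT_MARK:-apt-mark}

# hold_packages PACKAGE...
# Marks the given packages as held so apt will not upgrade them.
hold_packages() {
  "$APT_MARK" hold "$@"
}

# Example (on the Proxmox host, as root):
#   hold_packages nvidia-driver nvidia-smi
# To release the hold later:
#   apt-mark unhold nvidia-driver nvidia-smi
```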

Hopefully this will help someone in future. If you haven’t looked into Proxmox before I suggest you do.

Reference materials: